Model Planner and AutoML

Powerful tools to help you design the best neural architecture for your dataset.

Overview

Graph Neural Networks (GNNs) can deliver state-of-the-art model performance when used correctly. However, like many other deep learning techniques, there is no "one size fits all" approach to designing your GNNs. In order to deliver the best performance, GNNs architecture must to be customized to your dataset and problem. For example, on a recent customer dataset, we saw that a well-tuned GNN had 70% better performance than a poorly-tuned one.

Picking the best GNNs architecture for your problem is a difficult task. The landscape of GNN architectures is quite diverse, and there are many choices to choose from. For example, if you are trying to generate product recommendations for customers, you will likely want to use a combination of Identity Aware Graph Neural Network [You et al. 2021] and a Neural Graph Collaborative Filtering [Wang et al. 2019] in order to get the best model quality. However, for other types predictive queries such as LTV, Customer Churn, and demand forecasting, you will need to use very different architectures. Unless you follow latest research in deep learning, it can be hard to know which architecture is best for your specific problem.

In order to help you find the best GNN architecture for your dataset, Kumo provides two powerful tools:

- AutoML: By default, Kumo's AutoML algorithm will analyze your dataset and predictive query, to construct a training plan and GNN architecture search space that is tailored to your dataset. This plan will search a variety of different architectures that are known to work well for your problem space, incorporating the most recent techniques from recent research in the GNN community.

- Model Planner: In situations where you need more control, Kumo exposes a model planner that give you fine grained control over the shape and structure of the GNN for your dataset. Additionally, the model planner will give you control over column encoding, training table generation, and sampling. Using this model planner, experienced data scientists should be able to squeeze out a bit of extra performance when it really matters.

Kumo and AutoML

Whether you're a startup looking to make sense of your data or a large enterprise seeking to optimize your operations, Kumo's implementation of AutoML helps accelerate your model deployment efforts, allowing you to focus on the insights and value generated from your data, rather than the intricacies of model development.

How It Works

Whenever you write a new Predictive Query, the Kumo AutoML system will generate a modeling plan that covers three areas: Column Encoding, Training Table Generation, and GNN Architecture Search.

Column Encoding

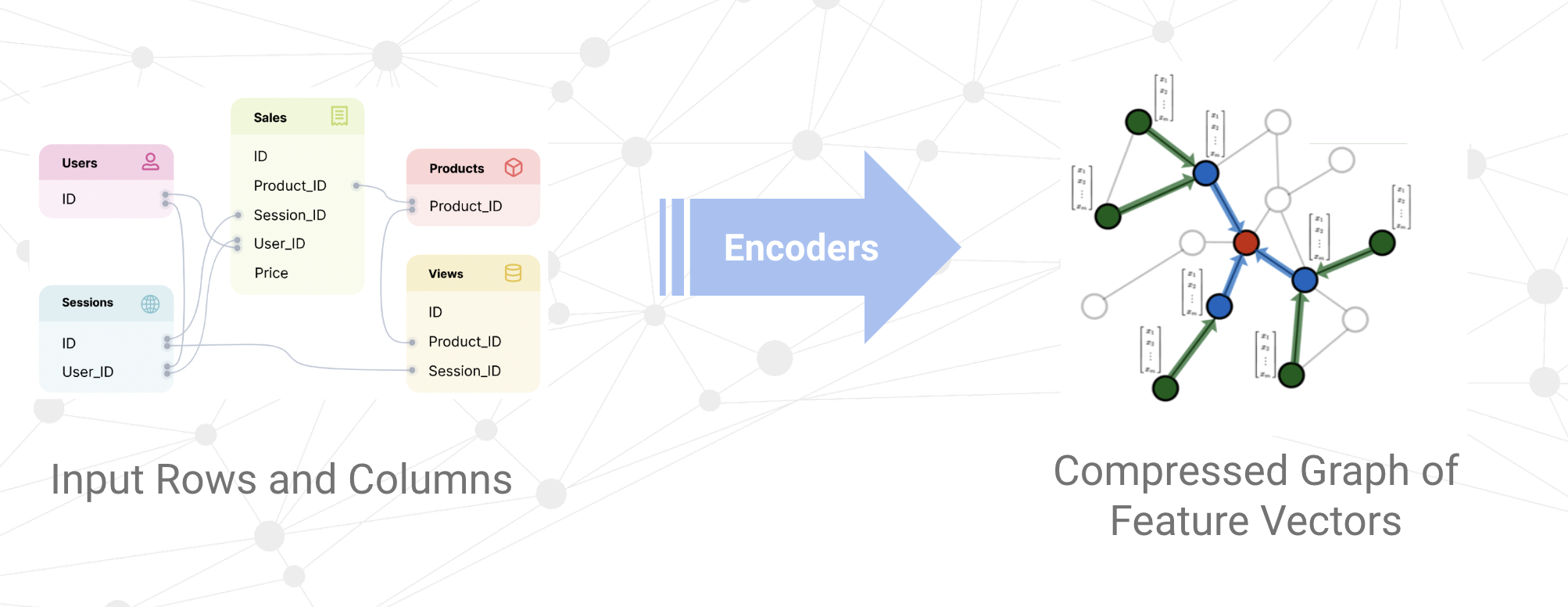

While GNNs largely eliminate the need for manual feature engineering, it is still necessary to perform column encoding to transform your raw tabular data into the actual bits and bytes that get feed into the neural network.

When building deep models by hand, a machine learning engineer would typically need to manually write code to generate features from their raw data and encode them into the neural network. Kumo fully automates this process by using a set of well-tested rules and algorithms to determine the best encoding for each column in your dataset. These algorithms look at many aspects of the columns, including the data type (typically inferred from your data warehouse), the semantic meaning of the column (inferred from the column name), and statistics about the data distribution in the column itself (such as cardinality and kurtosis).

To highlight the complexity of this task, it's useful to imagine the many different ways that an integer column may be encoded. For example, one would need to use the Hash encoder if it represents a high-cardinality identifier such as product_code, Date encoder if it represents a unix timestamp, Numerical encoder if it represents a quantity such as num_visits, or OneHot encoder if it represent a boolean True/False value. The Kumo AutoML algorithm fully automates this process for you, for all possible input data types, including text, numbers, categories, strings, and even arrays.

Training Table Generation

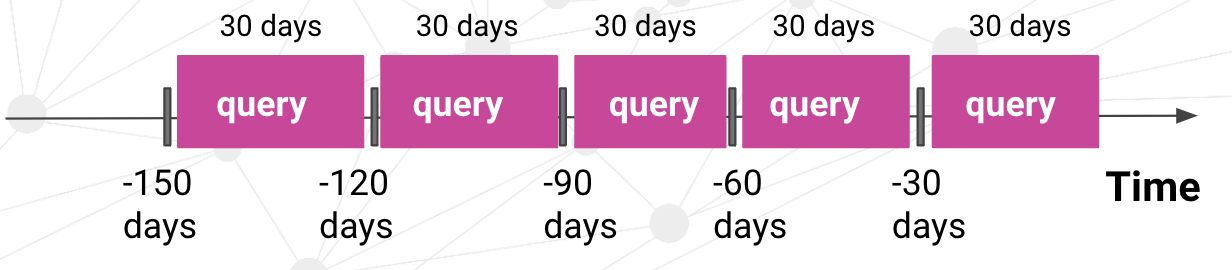

When training a machine learning model, it is generally required to create a training table with multiple data splits, such as train, validation, and holdout. When dealing temporal queries that make predictions about the future, it is critical that these splits are non overlapping and properly ordered, or else you may suffer data leakage that invalidates your results. It is also critical that these splits are well-balanced in terms of size.

Training table generation becomes even more complicated when you are predicting complex events, such as aggregations over time. For example, suppose that you are trying to generate a training table for the following predictive task that predicts events over a 30 day window.

To generate each training example, you need to travel back in time and "replay" the behavior of each user at specific times in the past (sampled at the appropriate rate). This quickly can get messy.

Kumo will automatically generate the proper sampling and training split methodology, based on your dataset and predictive query. Internally, Kumo inspects your data, to compute the optimal sample rates and splits for generating training examples. In the case of temporal queries, it ensures that the holdout split is strictly later in time than the training split, and also ensure that the training splits are well-balanced in size. This way, you get good performance out of the box for any Predictive Query, without worrying about mistakes when setting up your training splits.

GNN Architecture and Hyper-parameter Search

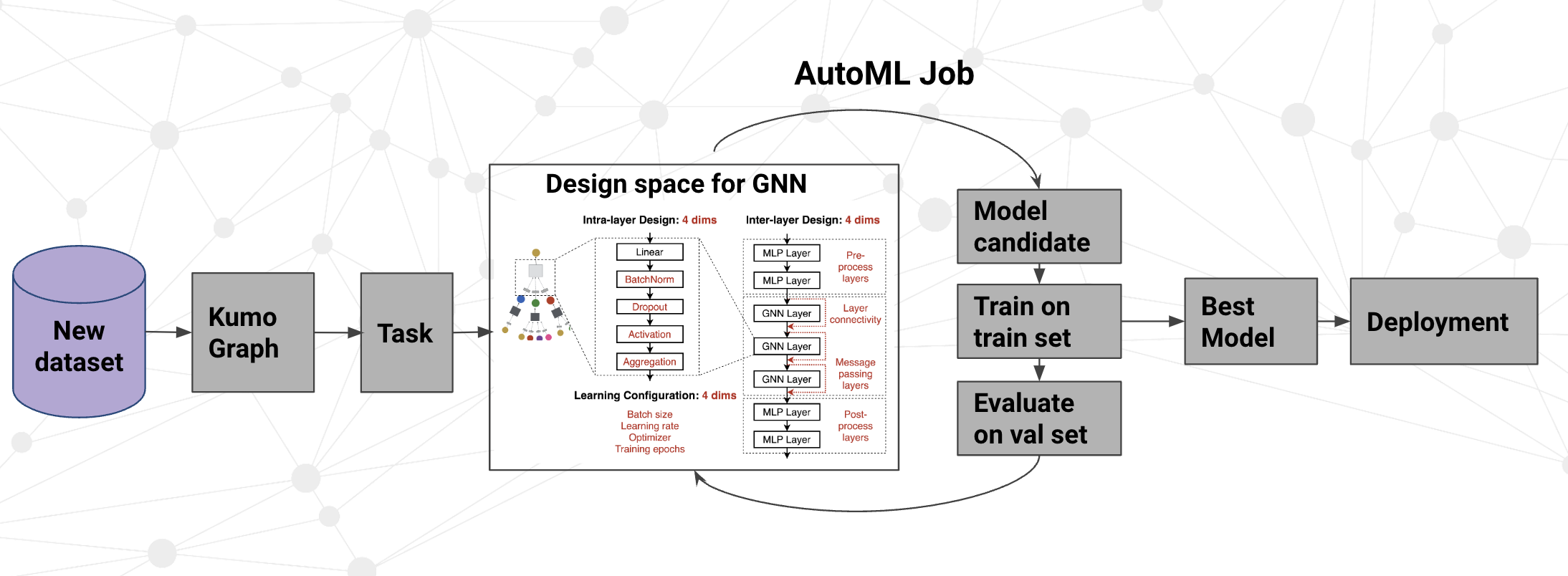

Graph Neural Nets don't refer to a single model architecture, but rather an entire family of model architectures, each with their own pros and cons. Kumo fuses many state-of-the-art GNN architectures, such as GraphSAGE, GIN, ID-GNN, GCN, PNA, and GAT, into a flexible, in-house module that gets the best of all these existing GNN models. Kumo AutoML automatically decides the best model hyper-parameters and training strategies depending on your specific predictive query and dataset. The hyper-parameters affect things such as the neighborhood sampling method, layer connectivity, embedding size, and aggregation methods.

When you run a predictive query, the Kumo Model Planner will generate an AutoML search space, based on your predictive query and dataset. Then, Kumo will run between 2 and 8 experiments to find the set of hyper-parameters that deliver the best performance for your model.

The single winning model architecture and hyper-parameter config is displayed in the UI, so that the user can see exactly what kind of architecture was used. As described in the next section, the user can directly edit the hyper-parameter config to get even more performance in certain scenarios.

Fine Grained Control using Kumo's Model Planner

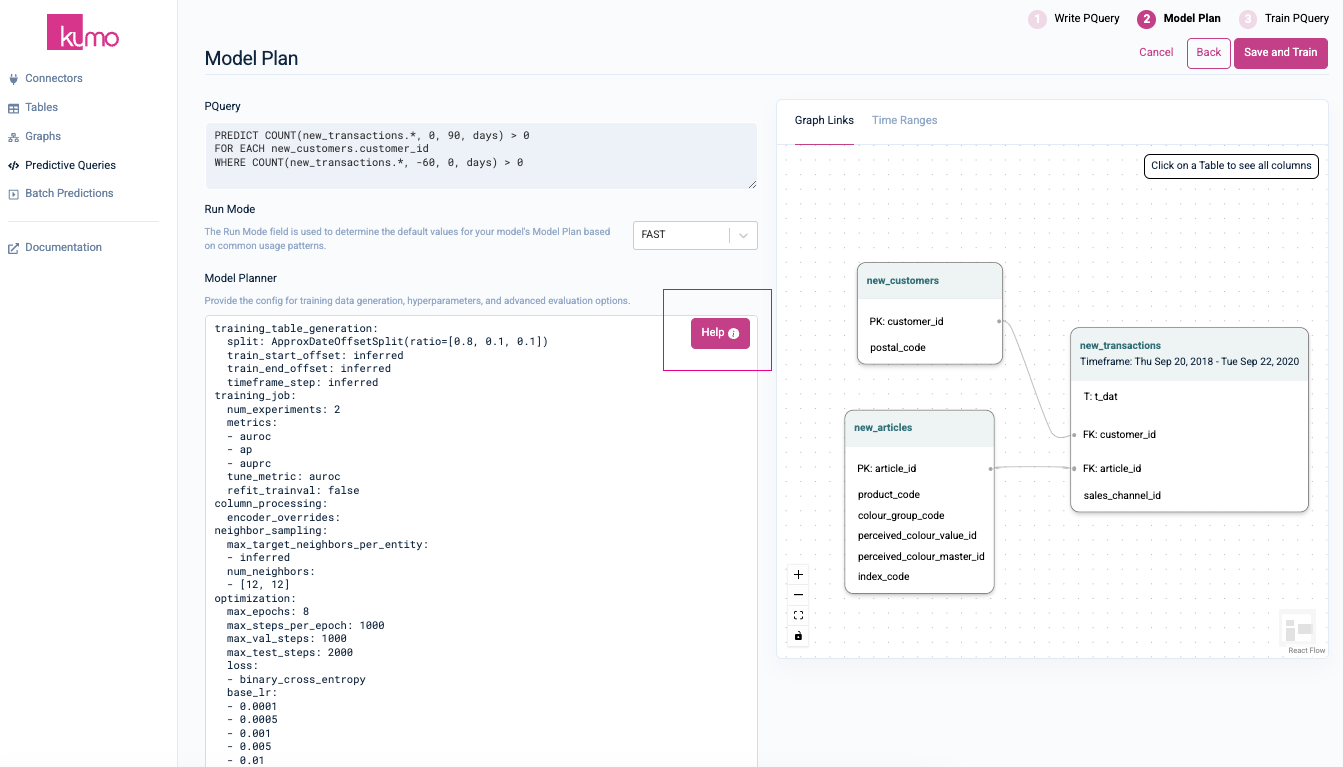

If you need fine-grained control over the Encoders, Training Strategy, or AutoML search space, you can use Kumo's Model Planner. While this is usually not necessary, there are a few situations where you might want to do this:

- Control the Data Split Strategy - The most common reason to use the Model Planner is to control the data split strategy. For example, it is very common to use a

TimeRangeSplitmodule to specify the exact holdout dataset, so that you can compare model performance against an existing model that was trained outside of Kumo. You can also enforce additional constraints that may be needed by your business, such as ensuring that there is a sufficiently large gap between the training dataset and the holdout dataset. - Make your Jobs Run Faster - If you already know what kind of model architecture you want (maybe based on your experience writing similar predictive queries on your dataset), you can use the Model Planner to skip the full AutoML architecture search, and focus on optimizing on a very narrow portion of the search space. For example, if you only run 1 experiment instead of 8, you can make your job potentially 8x faster, and increase your productivity as a data scientist.

- Maximize Performance - Additionally, if an additional 1-5% of performance really matters to you, you can use the Model Planner to eek out more performance, potentially at the cost of increased job runtime or other lost functionality. For example, the default model plan usually caps the # of channels to 256, since beyond that point the performance benefit usually does not outweigh the cost, but you are free to push the limit. Or, if you know what you are doing, you can use something like the "refit" option, which trains over the entire dataset, at the cost of losing eval metrics on the holdout dataset.

- Control the Data Encoding - In certain situations, you may care exactly how your data is encoded before it gets passed into the GNN. For example, for a particular numerical column, you may want missing values to be treated the same as "0". Or, you may want to enable a more expensive NLP encoding method for a particular text column that you feel is particularly important.

- Change the Optimization Method - Out of the box, Kumo will optimize metrics like AUROC, Loss, MAE. However, you can use the

tune_metricoption to change the behavior. This is particularly useful in the case of recommendation problems, where you can use themoduleoption to optimize the recommender for different goals (such as diversity vs recall). - Exporting Embeddings - If you intend to export embeddings to be used as a feature in a downstream model, or as part of KNN lookup in a recommender system, you can modify several options in the Model Planner to ensure that the embeddings will have the desired properties to meet your needs. For example, do you need the embeddings to be stable across model retraining? Do you plan to use cosine similarity to compare embeddings? Or, if you do not care about embeddings at all, you can enable advanced types of GNN architectures (such as ID-GNN) to improve your model accuracy at the cost of not supporting embeddings.

The Model Planner is exposed as part of the Predictive Query creation process. You can click the "Help" button to quickly access Kumo documentation and see all the Model Planner Options.

Updated 2 months ago