Detecting Payback Abuse

Predict which users are unlikely to pay back their loans.

Solution Background and Business Value

Payback abuse is a common type of fraud which arises in buy-now-pay-later platforms, and is closely related to credit card fraud and certain kinds of insurance fraud, although the data may look slightly different in each of these.

The payback abuse detection task is concerned with transaction-level decisions: predicting user intent for each transaction. Detecting fraudulent behavior in advance and preventing those transactions/orders from going through prevents losses for the business. The machine learning metrics that tie the ML task to business value are $-amount weighted recall@K and precision@K. Without any ML model, we find ourselves in the following situation:

where unpaid orders are all the orders which we falsely classified as licit. Especially since the profit on an order is only a small margin of the order value in $, it is worthwhile to create an ML model which interrupts high-risk orders. After the system is in place (with fraudulent cases treated as positive labels):

The gain in profit depends on the balance between the profit lost on held-back orders that would have been paid, which correspond to the false positives of the model, and the $-amount of losses mitigated, which corresponds to the true positives of the model.
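The metrics and the profit trade-off described above can be made concrete with a short sketch. The definitions here are assumptions, not taken from Kumo: precision@K is the share of the flagged $-amount that is fraudulent, recall@K is the share of the total fraudulent $-amount that is flagged, the loss on an unpaid order is taken to be the full order value, and `margin` is a hypothetical constant profit margin. Fraudulent orders are the positive class.

```python
from typing import Sequence, Tuple


def weighted_precision_recall_at_k(
    scores: Sequence[float],
    is_fraud: Sequence[bool],
    amounts: Sequence[float],
    k: int,
) -> Tuple[float, float]:
    """$-amount weighted precision@K and recall@K (assumed definitions)."""
    # Rank orders from highest to lowest predicted fraud score, keep the top K.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    top_k = ranked[:k]

    flagged_amount = sum(amounts[i] for i in top_k)
    caught_fraud_amount = sum(amounts[i] for i in top_k if is_fraud[i])
    total_fraud_amount = sum(a for a, f in zip(amounts, is_fraud) if f)

    precision = caught_fraud_amount / flagged_amount if flagged_amount else 0.0
    recall = caught_fraud_amount / total_fraud_amount if total_fraud_amount else 0.0
    return precision, recall


def profit_gain(
    flagged: Sequence[bool],
    is_fraud: Sequence[bool],
    amounts: Sequence[float],
    margin: float,
) -> float:
    """Losses avoided on true positives minus profit forgone on false positives."""
    rows = list(zip(amounts, flagged, is_fraud))
    # True positives: fraudulent orders we held back, so the order value is saved.
    losses_avoided = sum(a for a, p, f in rows if p and f)
    # False positives: licit orders we held back, so their margin is lost.
    profit_forgone = sum(margin * a for a, p, f in rows if p and not f)
    return losses_avoided - profit_forgone


# Toy data: four orders, two of them fraudulent.
scores = [0.9, 0.8, 0.3, 0.1]
is_fraud = [True, False, True, False]
amounts = [200.0, 100.0, 50.0, 400.0]

precision, recall = weighted_precision_recall_at_k(scores, is_fraud, amounts, k=2)
gain = profit_gain([True, True, False, False], is_fraud, amounts, margin=0.05)
```

Because the margin is small relative to the order value, holding back a single large fraudulent order can outweigh the profit lost on several licit orders that are wrongly held back.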

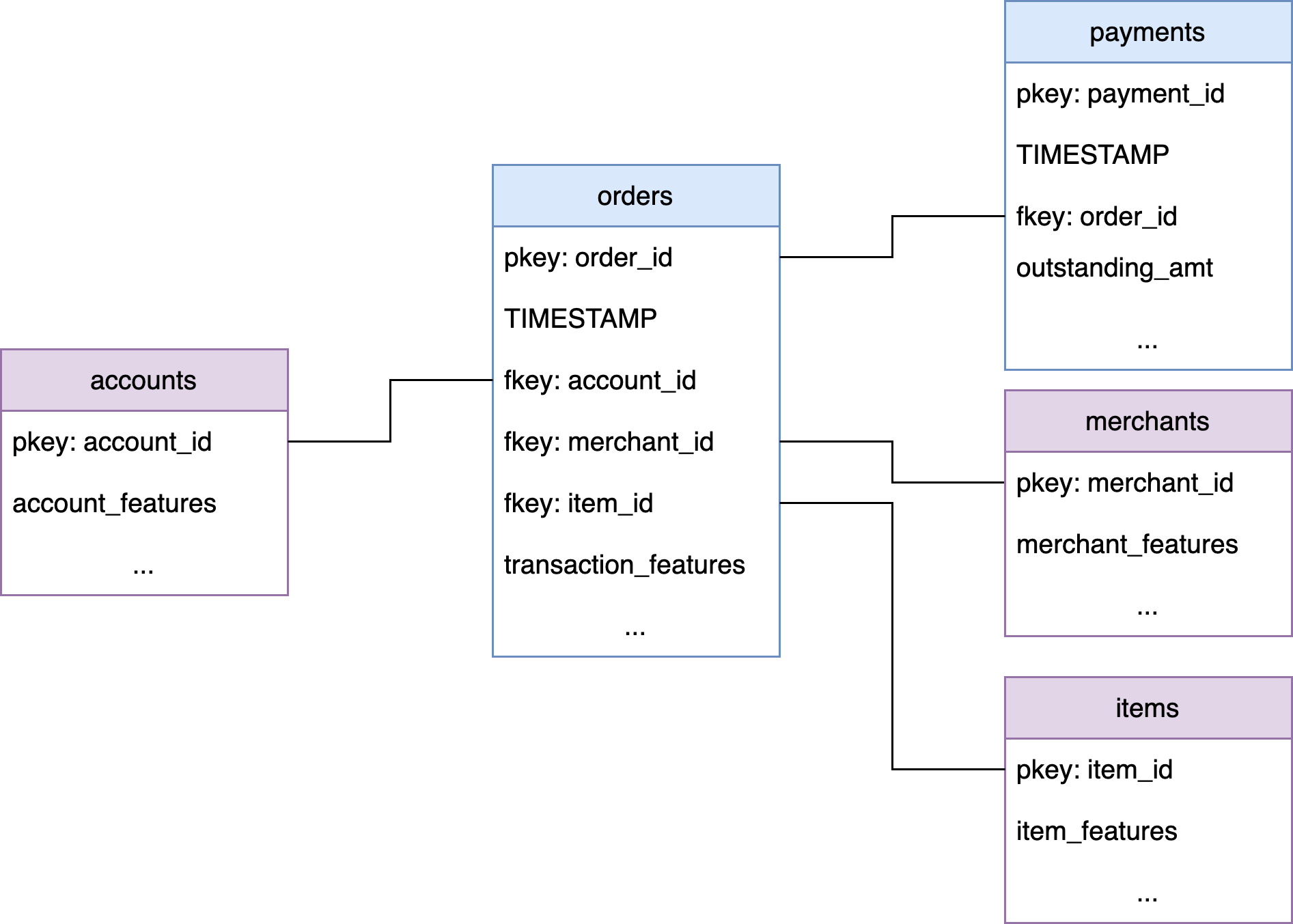

Data Requirements and Kumo Graph

Regardless of the approach taken, a core set of tables is needed to tackle this use case. Below is a non-exhaustive list of the data that needs to be included.

Core tables

- Transactions/Orders: The transactions/orders table should include information about each transaction. It must have a timestamp and an account_id so that each transaction can be linked to an account.

- Payments: The payments table should include information about the payments corresponding to a particular order. Payments must contain a timestamp and an order_id so that they can be linked to that order. The table may also include account_ids so that payments can be linked to accounts as well.

- Accounts: Static information about user accounts.
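As a rough illustration of how the three core tables link together, the sketch below models one row of each table and checks the foreign keys. Only `timestamp`, `account_id`, `order_id` and `outstanding_amt` come from this page; `payment_id`, `order_value` and the helper `find_broken_links` are hypothetical names introduced for the example.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Account:
    account_id: str


@dataclass
class Order:
    order_id: str
    account_id: str  # links the order to an account
    timestamp: datetime
    order_value: float  # hypothetical column name


@dataclass
class Payment:
    payment_id: str  # hypothetical column name
    order_id: str  # links the payment to an order
    timestamp: datetime
    outstanding_amt: float
    account_id: Optional[str] = None  # optional direct link to an account


def find_broken_links(
    accounts: List[Account], orders: List[Order], payments: List[Payment]
) -> List[str]:
    """Report orders without a known account and payments without a known order."""
    account_ids = {a.account_id for a in accounts}
    order_ids = {o.order_id for o in orders}
    problems = []
    for o in orders:
        if o.account_id not in account_ids:
            problems.append(f"order {o.order_id}: unknown account {o.account_id}")
    for p in payments:
        if p.order_id not in order_ids:
            problems.append(f"payment {p.payment_id}: unknown order {p.order_id}")
    return problems
```

Running a check like this before building the graph helps catch rows that would otherwise be left unlinked.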

Additional table suggestions

There are many other tables which can optionally be included, such as:

- (optional) Merchants: Static information about merchants in the marketplace.

- (optional) Items: Static information about items in the marketplace.

- (optional) Account 360: Data about the account 360 view, such as past activity, credit checks, etc.

Predictive Query

The exact predictive query used depends on what we deem as fraudulent orders. If we define a fraudulent order as an order which remains unpaid after X days, we can train a model to predict this behavior as:

PREDICT LAST(payments.outstanding_amt, 0, X, days) != 0

FOR EACH orders.order_id

Note: for the above PQ to work, the payments table has to include an initial 'payment' for each order with outstanding_amt = order value. This way a label (positive, that is, unpaid) is still generated for orders which had no additional payments. If the payments table is not formatted in this way, you can instead predict whether a customer will have a payment with 0 outstanding amount.
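The effect of the initial 'payment' row can be illustrated outside of Kumo with a few lines of plain Python. This is a minimal sketch, not the Kumo engine: the `order_value` column, both helper functions and the inclusive window boundaries are assumptions made for the example.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional


def add_initial_payments(orders: List[Dict], payments: List[Dict]) -> List[Dict]:
    """Add one synthetic row per order with outstanding_amt = order value."""
    initial = [
        {
            "order_id": o["order_id"],
            "timestamp": o["timestamp"],
            "outstanding_amt": o["order_value"],
        }
        for o in orders
    ]
    # Stable sort: on equal timestamps the synthetic row stays before real payments.
    return sorted(initial + list(payments), key=lambda p: p["timestamp"])


def unpaid_after(order: Dict, payments: List[Dict], x_days: int) -> Optional[bool]:
    """Mimic LAST(payments.outstanding_amt, 0, X, days) != 0 for a single order."""
    window_end = order["timestamp"] + timedelta(days=x_days)
    in_window = [
        p
        for p in payments
        if p["order_id"] == order["order_id"]
        and order["timestamp"] <= p["timestamp"] <= window_end
    ]
    if not in_window:
        return None  # no payment rows in the window, so no label can be derived
    last = sorted(in_window, key=lambda p: p["timestamp"])[-1]
    return last["outstanding_amt"] != 0


orders = [
    {"order_id": "o1", "timestamp": datetime(2024, 1, 1), "order_value": 100.0},
    {"order_id": "o2", "timestamp": datetime(2024, 1, 2), "order_value": 60.0},
]
# Only o1 is ever paid off; o2 never receives a payment.
payments = [
    {"order_id": "o1", "timestamp": datetime(2024, 1, 10), "outstanding_amt": 0.0},
]

with_initial = add_initial_payments(orders, payments)
labels = {o["order_id"]: unpaid_after(o, with_initial, x_days=30) for o in orders}
labels_without = {o["order_id"]: unpaid_after(o, payments, x_days=30) for o in orders}
```

With the synthetic rows, the never-paid order `o2` gets the label `True`. Without them it gets no label at all, which is the situation the note above warns about.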

Alternatively, if we want a more flexible definition of fraudulent orders, we can generate the labels ourselves and store them as a boolean column in the transactions table (0 - licit, 1 - illicit, None - order to be scored). We then train a model like so:

PREDICT orders.label == 1

FOR EACH orders.order_id

Deployment

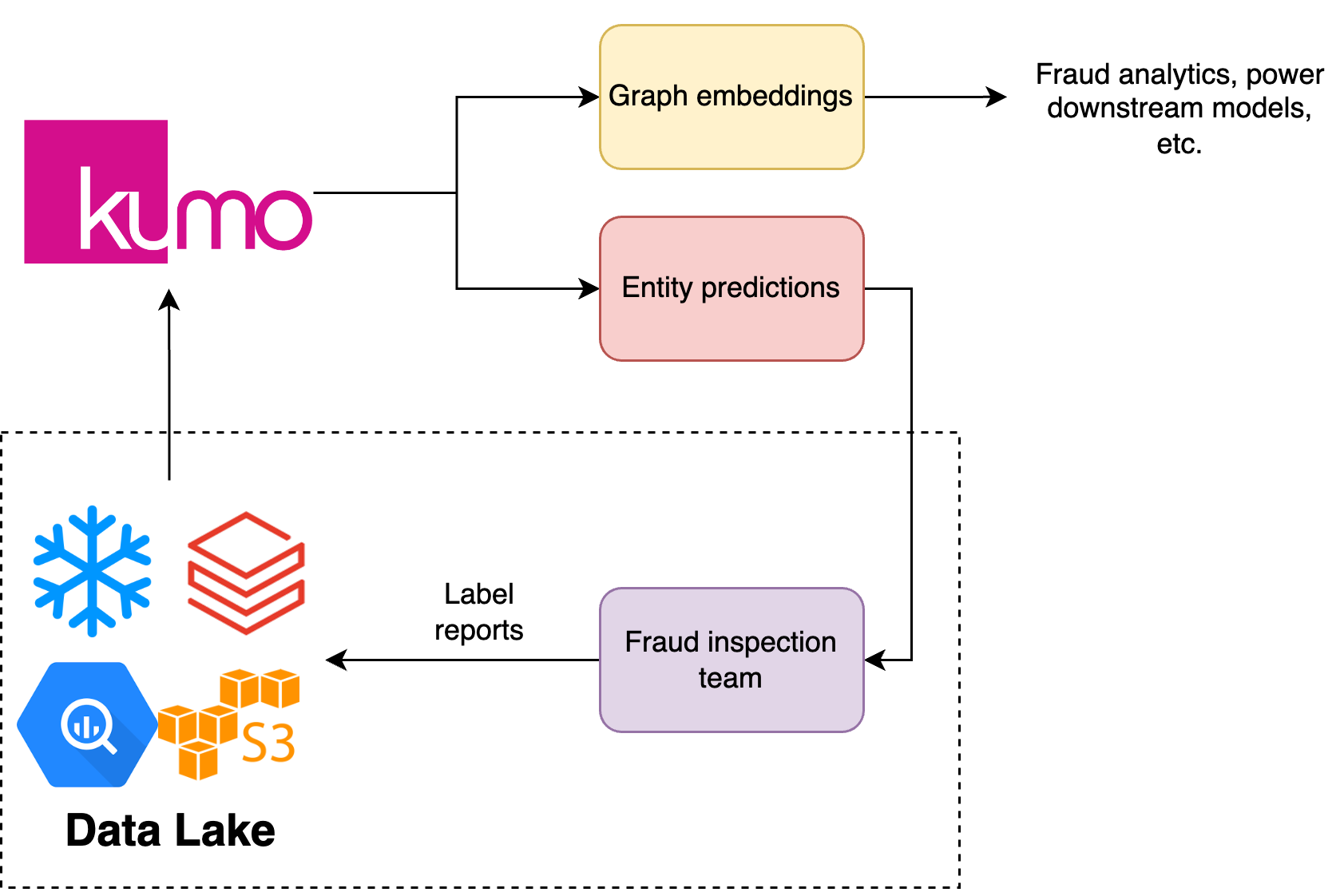

Batch workflow feeding a fraud inspection team

The deployment model depends on the requirements of the detection system. For situations without strict latency requirements we can proceed in a batched fashion.

We can produce order-level predictions at relevant intervals, for example every 1, 3, or 6 hours, daily, or every few days. This is easily achieved by using filters at batch prediction time, setting:

ENTITY FILTER: orders.TIMESTAMP > MIN_TIMESTAMP

Real-time Solution with a Lightweight Wrapper

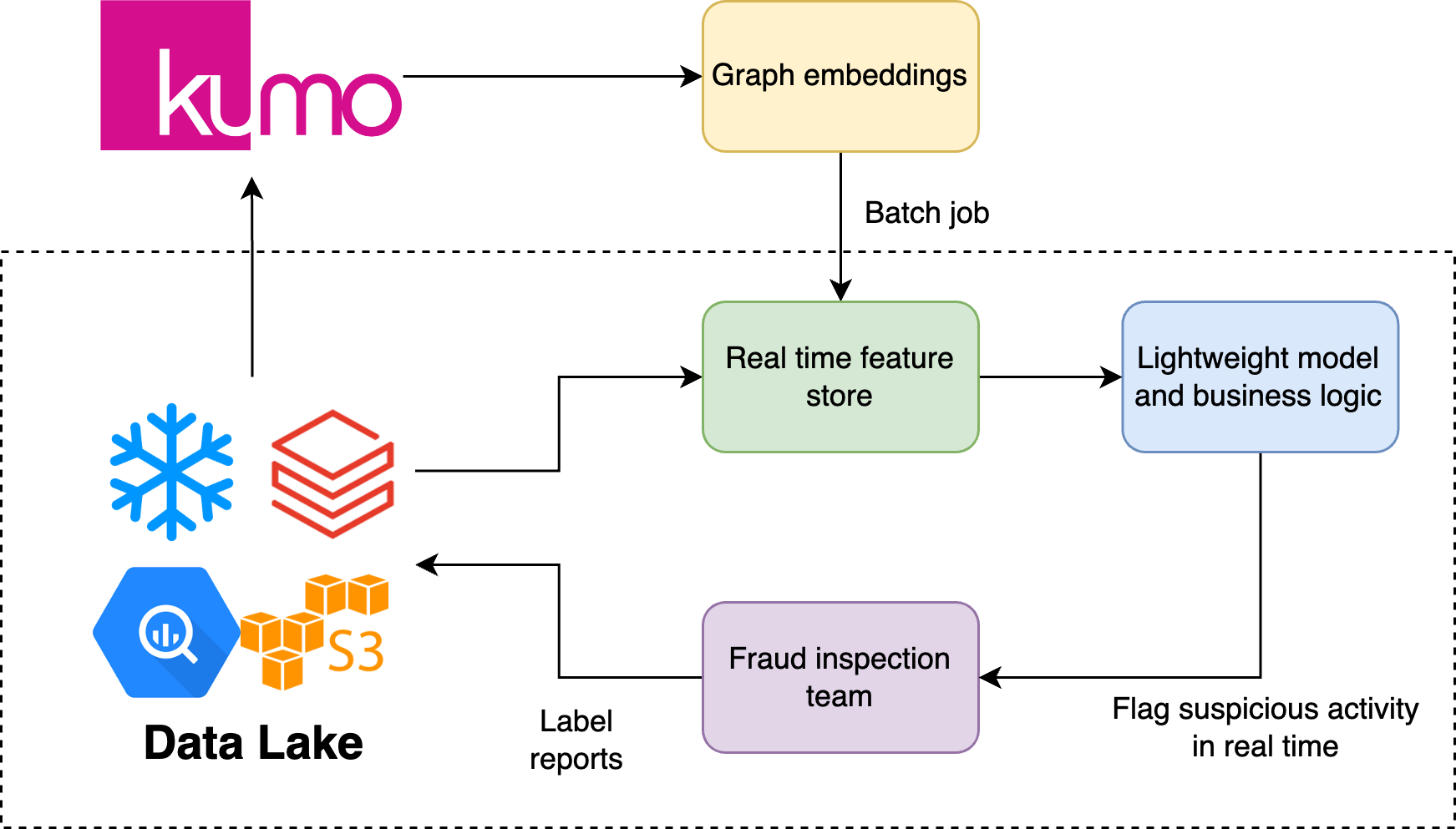

If the latency requirements are too strict to allow for a batch solution, some additional care is needed. The recommended approach is to restructure the problem: produce Kumo embeddings for the relevant entities, then combine those embeddings with real-time features at prediction time to produce real-time scores at the order level.
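A minimal sketch of such a wrapper is shown below, assuming the embeddings were produced in batch and stored in a key-value lookup. Everything here is hypothetical: the store layout, the zero-vector fallback for unseen accounts, and the logistic model standing in for the lightweight wrapper, whose weights would be fitted separately.

```python
import math
from typing import Dict, List, Sequence


def lookup_embedding(
    store: Dict[str, List[float]], account_id: str, dim: int
) -> List[float]:
    """Fetch a precomputed embedding, falling back to zeros for unseen accounts."""
    return store.get(account_id, [0.0] * dim)


def score_order(
    account_embedding: Sequence[float],
    realtime_features: Sequence[float],
    weights: Sequence[float],
    bias: float,
) -> float:
    """Combine a batch embedding with real-time features into a fraud score."""
    features = list(account_embedding) + list(realtime_features)
    if len(features) != len(weights):
        raise ValueError("weights must match embedding + real-time feature length")
    logit = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-logit))  # logistic function, output in (0, 1)


# Hypothetical embedding store with 2-dimensional account embeddings.
embedding_store = {"a1": [0.5, -1.0]}
# Hypothetical real-time features: order amount in $1000s, orders in the last hour.
realtime = [0.2, 3.0]
weights = [0.4, -0.3, 1.5, 0.6]

emb = lookup_embedding(embedding_store, "a1", dim=2)
score = score_order(emb, realtime, weights, bias=-2.0)
```

The expensive part, producing embeddings, stays in the batch pipeline, while the per-order work at request time is a lookup plus a small dot product.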
